Can you fix algorithmic bias?

Make it a career at UniSA

Exhibit Details


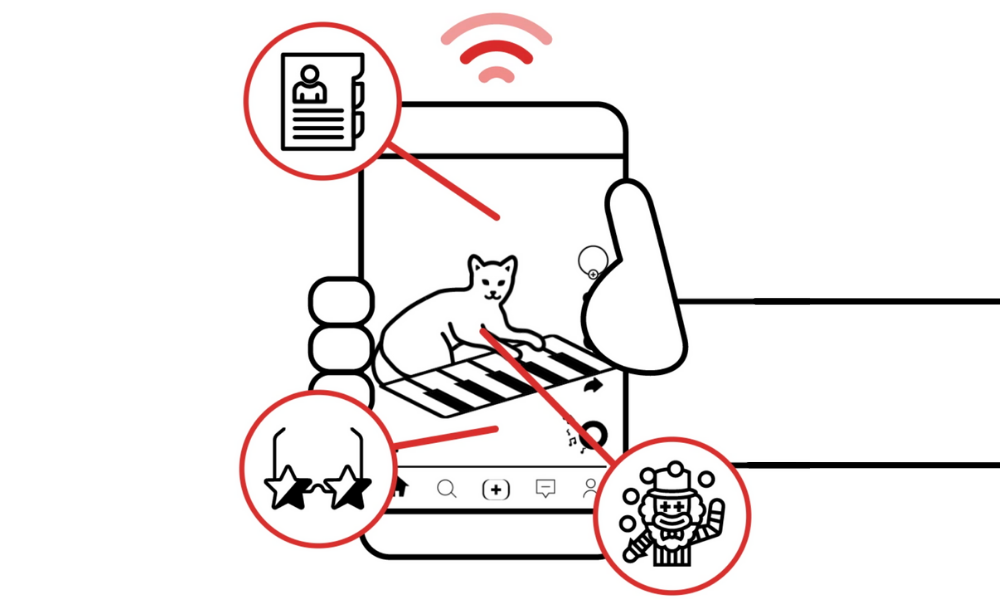

Over 33,000 people looked at photographs of faces and judged them, deciding how calm, kind or weird each person looked. An algorithm was fed all of these judgements, and it will now use them to make judgements about you.

Can algorithms make accurate judgements about us based on our faces? No. They inherit all the same biases that humans have. But does this stop companies from using these algorithms to make decisions about us every day? Of course not.

Mirrors that know more than humans have been a mainstay of stories and fairy tales for generations. But today mirrors promise to do more than tell your future. They can now read your face to determine who you really are inside. How accurate is all of this? Not very.

Let’s take it back a step. The algorithm that powers the Biometric Mirror is accurate in one narrow sense: it faithfully reports the public perception of your face, based on the way many people judged more than 2,000 faces. But what the mirror then tells you about yourself isn’t necessarily accurate at all.
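To make that distinction concrete, here is a minimal Python sketch of how a system like this could turn crowd judgements into a "reading" of a new face. It is an illustration only, not the Biometric Mirror's actual code: the two-number face features, the 1-7 rating scale, the sample values and the `predict_perceived_trait` function are all hypothetical. The sketch uses k-nearest neighbours, finding the training faces that look most similar and reporting the average rating people gave them.

```python
import math
from statistics import mean

# Hypothetical training set (illustrative values only): each face is reduced
# to a two-number feature vector, paired with the average "calm" rating that
# human raters gave it on a 1-7 scale.
TRAINING_FACES = [
    ((0.20, 0.80), 6.1),
    ((0.25, 0.75), 5.8),
    ((0.50, 0.50), 4.0),
    ((0.65, 0.35), 2.9),
    ((0.70, 0.30), 2.4),
]


def predict_perceived_trait(face, training=TRAINING_FACES, k=3):
    """Average the crowd ratings of the k training faces closest to `face`.

    The result summarises how people perceived similar-looking faces;
    it carries no information about the person's actual temperament.
    """
    # Sort training faces by Euclidean distance to the new face, keep k.
    nearest = sorted(training, key=lambda item: math.dist(face, item[0]))[:k]
    return mean(rating for _, rating in nearest)


print(round(predict_perceived_trait((0.22, 0.78)), 2))
```

Here the new face sits closest to the faces rated 6.1, 5.8 and 4.0, so the sketch reports about 5.3. That number is an accurate summary of what raters thought, which is exactly why it can still be wrong about you.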

Don’t think of the Biometric Mirror as a tool for psychological analysis. Just because the algorithm thinks you look humble, it doesn’t necessarily mean you are. Instead, we ask that you reflect on the whole experience. Why believe this artificial intelligence when it says you’re calm? And how could the way that your eyebrows meet your nose determine that anyway?

We should be careful when relying on AI, especially when a system is trained on the beliefs or decisions of humans. An algorithm that learns from human judgements inherits the biases behind them. That’s how we end up with Twitter’s image-cropping algorithm cutting Black people out of photos, or an Amazon recruiting tool favouring men over women.
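A toy simulation shows how that transfer happens. This is a minimal Python sketch under made-up assumptions, not a model of Twitter's or Amazon's systems: every simulated face is equally kind, but the simulated raters mark down faces with an unrelated visual attribute, labelled "group B" here. The penalty size, noise level and function names are hypothetical. A simple least-squares model trained on those ratings learns the raters' penalty as if it were a fact about faces.

```python
import random

# Illustrative assumptions (not data from the exhibit): every face is equally
# kind in reality, but human raters knock RATER_PENALTY points off faces that
# belong to "group B", a visual attribute unrelated to kindness.
TRUE_KINDNESS = 5.0
RATER_PENALTY = 1.5


def simulate_crowd_ratings(n_per_group=500, noise=0.5, seed=0):
    """Return (is_group_b, rating) pairs produced by biased, noisy raters."""
    rng = random.Random(seed)
    ratings = []
    for is_group_b in (0, 1):
        for _ in range(n_per_group):
            rating = TRUE_KINDNESS - RATER_PENALTY * is_group_b + rng.gauss(0, noise)
            ratings.append((is_group_b, rating))
    return ratings


def fit_least_squares(pairs):
    """Fit rating = intercept + slope * is_group_b by ordinary least squares."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    slope = sum((x - x_mean) * (y - y_mean) for x, y in pairs) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    intercept = y_mean - slope * x_mean
    return intercept, slope


intercept, slope = fit_least_squares(simulate_crowd_ratings())
# The model is never told anything about kindness itself; it only sees the
# ratings, so it learns the raters' penalty and slope lands near -RATER_PENALTY.
print(f"group A predicted kindness: {intercept:.2f}")
print(f"group B predicted kindness: {intercept + slope:.2f}")
```

Nothing in the code singles out group B on purpose. The bias arrives entirely through the human ratings, and the model reproduces it faithfully.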

Discover More:

Watch:

Read:

- Holding up a black mirror to Artificial Intelligence

- The implications of automated decision-making through systems

- Another report on the implications of these decision-making systems

- More information on Biometric Mirror

Listen:

Audio description (for both Biometric Mirror and Mirror Ritual):

Credits

- Lucy McRae Artist

- Niels Wouters Research

- Jimy McGilchrist Exhibition Build

- Marc Mahfoud Soundscape

- Sandpit Digital Developer

- Biometric Mirror was originally commissioned by Science Gallery Melbourne